A Light Introduction to Reinforcement Learning

Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are widely used by companies to prepare for the future by making predictions from historical data. However, traditional machine learning approaches, whether supervised, unsupervised, or deep learning, typically need large amounts of data to produce good predictions. Reinforcement learning (RL), which mimics the way human beings learn and follows the curve of the human learning process, has attracted many researchers’ attention because an agent learns not only from historical data but also from its own “experience”: it tries a task repeatedly and adapts based on the results of previous attempts. Through this mechanism, agents can improve their performance and adapt to a variety of situations.

In RL algorithms, no specific action is prescribed for each scenario; instead, the system must discover which actions yield the most reward on the way to a goal. The procedure is essentially trial and error. RL was originally used in psychology to study animal learning and to train animals to mimic human learning ability. Today, RL is widely used in computer applications, including games, with the self-taught AlphaGo as a well-known example.

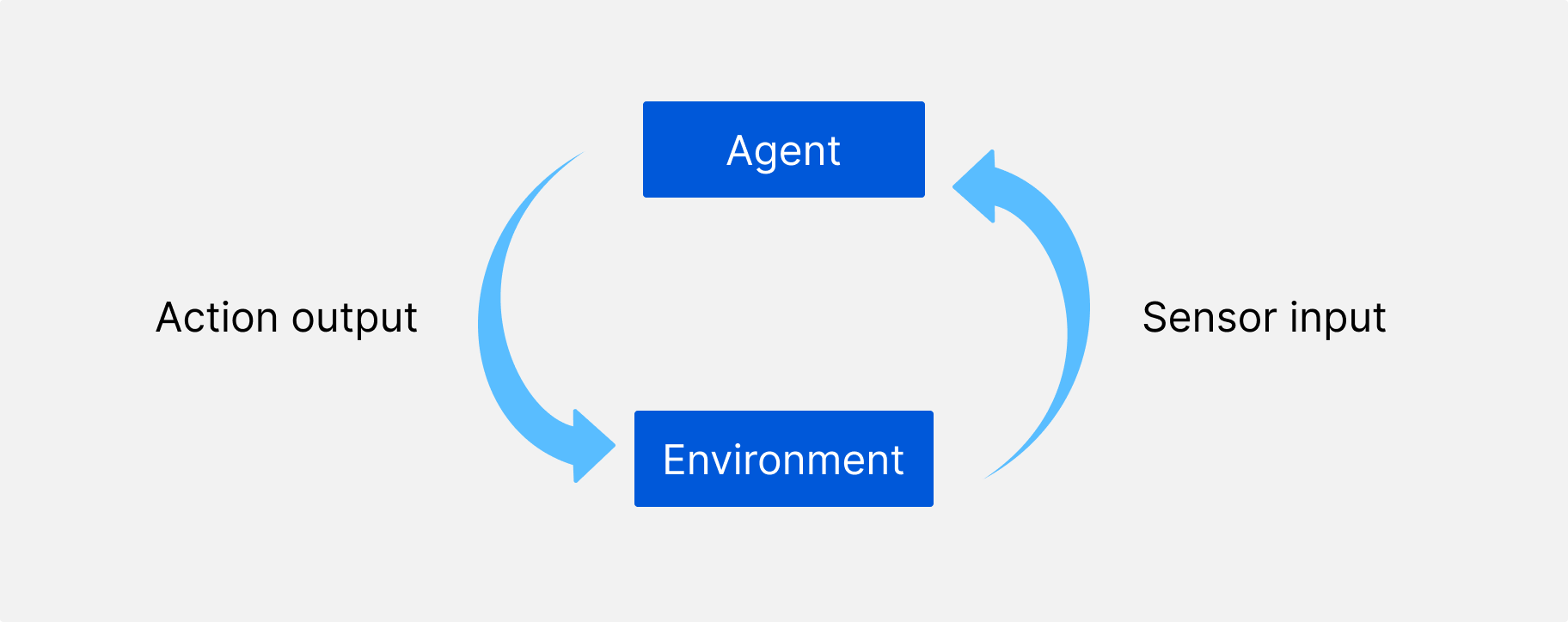

Decision-making systems are designed to collect information from different sources and to interact with different agents to reach optimal goals. According to Michael Wooldridge in his book “An Introduction to MultiAgent Systems”, an intelligent agent is an algorithm that is situated in some environment and is capable of independent, autonomous action in that environment on behalf of its user or owner. An intelligent agent has reactive, proactive, and social properties. In general, an agent perceives its environment through ongoing interaction and responds to the changes that occur. Figure 1 shows how an agent perceives its environment and acts to change it.

An agent can use RL algorithms to maximize its long-term payoff from interacting with the environment, much as humans do when they learn. In many situations, however, a single reinforcement learning agent cannot solve a real-world problem, especially where decision-making is involved. Like human beings, agents can collaborate and cooperate to handle complicated situations. A multi-agent system (MAS) is a software system in which a number of intelligent agents interact with one another to reach goals that are difficult for an individual agent to achieve. Typically, agents exchange messages through specific agent communication languages. In the most general case, agents act on behalf of users with different goals and motivations. To interact successfully, they need the ability to cooperate, coordinate, and possibly negotiate with each other. Designing a MAS involves both a micro and a macro design: agent design and society design. In agent design, we consider how to build agents capable of independent, autonomous action so that they can successfully carry out the tasks users delegate to them. In society design, the focus is on the interaction capabilities (cooperation, coordination, and negotiation) needed to achieve the delegated goals, especially when agents' goals conflict. This article focuses on agent design with RL.

Reinforcement Learning for Intelligent Agent

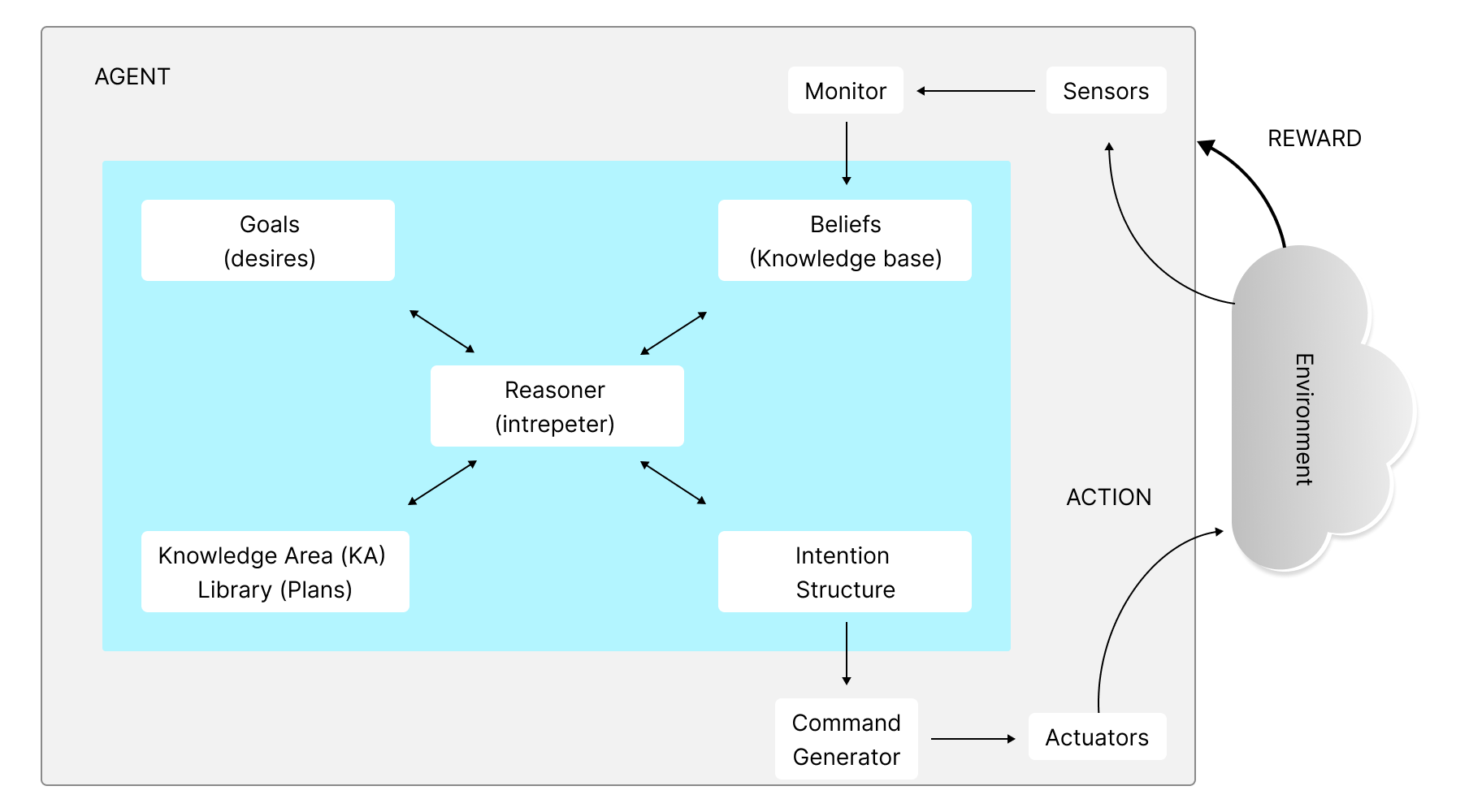

In general agent design, the agent architecture specifies the agent's component modules and the interactions among them. Here, an agent system based on the Procedural Reasoning System (PRS) architecture is built with reinforcement learning methods. Figure 2 shows the PRS agent architecture with reinforcement learning.

In this PRS agent architecture, an agent receives input data through its sensors as it explores the environment and computes actions, based on its knowledge and rewards, to carry out its goals. Because reinforcement learning is driven by a trial-and-error procedure, the agent interacts directly with the environment and teaches itself over time, eventually achieving its designated goal. The process is repeated to find rewards larger than the best found so far, which keeps improving the agent’s performance.
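The sense-act-reward cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ToyEnvironment`, its five-cell corridor, and `run_episode` are hypothetical names invented for this sketch and are not part of the PRS architecture.

```python
import random


class ToyEnvironment:
    """Hypothetical environment: a corridor with cells 0..4; the goal is cell 4."""

    def __init__(self):
        self.state = 0

    def step(self, action):
        # action is -1 (move left) or +1 (move right); the position is clamped to [0, 4]
        self.state = max(0, min(4, self.state + action))
        reward = 1.0 if self.state == 4 else 0.0
        done = self.state == 4
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Sense the state, act, receive a reward, and repeat until the goal or the step limit."""
    state, total_reward = env.state, 0.0
    for _ in range(max_steps):
        action = choose_action(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
random_return = run_episode(ToyEnvironment(), lambda s: rng.choice([-1, 1]))
greedy_return = run_episode(ToyEnvironment(), lambda s: 1)  # always move right
```

An agent that always moves right reaches the goal in four steps and collects the full reward, while the random agent only gets there by chance. Learning is what closes that gap.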

Beyond the agent and the environment, the interaction between them is described by three essential elements (a policy, a reward signal, and a value function) plus an optional model of the environment (only for model-based RL). Given a set of states S and a set of actions A in the agent-environment interface, the essential elements of reinforcement learning can be defined as follows:

A policy: A policy π, written π(a|s), determines the agent's behavior by mapping a state s of the environment to the action a taken in that state. In an uncertain system, policies are stochastic: π(a|s) gives the probability of choosing action a in state s. Most real-world systems are stochastic.
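As a rough illustration, a stochastic policy can be stored as a table of action probabilities per state and sampled from. The state and action names below are borrowed from the case study later in this article, but the probabilities are made up for this sketch, and `sample_action` is a hypothetical helper.

```python
import random

# Hypothetical stochastic policy pi(a|s): one probability per action, per state.
policy = {
    "started": {"Execute": 0.8, "Discuss": 0.2},
    "revised": {"Execute": 1.0},
}


def sample_action(policy, state, rng):
    """Draw one action for `state` according to the probabilities pi(a|state)."""
    actions = list(policy[state].keys())
    weights = list(policy[state].values())
    return rng.choices(actions, weights=weights, k=1)[0]


rng = random.Random(42)
samples = [sample_action(policy, "started", rng) for _ in range(1000)]
execute_share = samples.count("Execute") / len(samples)  # should be close to 0.8
```

A deterministic policy is the special case in which one action per state has probability 1, as in the `revised` row.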

A reward: A reward is a score for an action. It tells the agent how many points it earns by executing the action and moving from the current state to the next state, and it is delivered by a reward signal. An agent tries to maximize the total reward it receives over the long run. Every policy has its own reward. For example, when an agent in state $s_t$ performs action $a_t$ and moves to state $s_{t+1}$, the environment returns a feedback reward $r_{t+1}$ to the agent. The agent therefore knows that the reward for taking action $a_t$ in state $s_t$ under policy π is $r_{t+1}$. An agent works through a series of policies that improve over time in pursuit of better performance. This process is called policy improvement.

The probability of moving to state $s'$ and receiving reward $r$, given the preceding state $s$ and action $a$, is:

$$p(s', r \mid s, a) \doteq \Pr\{S_{t+1} = s', R_{t+1} = r \mid S_t = s, A_t = a\}$$

A value function: A value function specifies long-run earnings. The value of a state s is the reward an agent expects to accumulate from state s to the final state; it is calculated as the expected return from state s. Value functions define an ordering over policies and are used to evaluate how “good” a certain policy is for achieving the agent's goals. The value function of a state s under a policy π, denoted $v_\pi(s)$, is the expected return when starting in s and following policy π. The return is defined as a function of the reward sequence; the simplest return is the sum of the rewards.

$$v_\pi(s_t) = \mathbb{E}_\pi\left[r_{t+1} + r_{t+2} + \dots + r_T\right] = \mathbb{E}_\pi\left[\sum_{k=0}^{T-t-1} r_{t+k+1}\right]$$
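For a single observed episode, the return is simply the sum of the rewards that followed the state. A minimal sketch, assuming the rewards are collected in a Python list; `episode_return` is a hypothetical helper, and its optional `gamma` argument anticipates the discount rate that the Bellman equation introduces next.

```python
def episode_return(rewards, gamma=1.0):
    """Return r_{t+1} + gamma * r_{t+2} + gamma^2 * r_{t+3} + ... for one episode."""
    g = 0.0
    # Work backwards so that each earlier reward adds the discounted return that follows it.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


undiscounted = episode_return([1, 4])            # plain sum of the rewards
discounted = episode_return([1, 4], gamma=0.5)   # the later reward counts for half
```

With `gamma=1.0` the function reduces to the plain sum. Averaging the returns of many sampled episodes that start in the same state gives an estimate of that state's value.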

The Bellman equation for $v_\pi$ expresses the relationship between the value of a state $s$ and the values of its possible successor states:

$$v_\pi(s) = \sum_a \pi(a \mid s) \sum_{s', r} p(s', r \mid s, a)\left[r + \gamma v_\pi(s')\right]$$

where $\gamma$, $0 \le \gamma \le 1$, is the discount rate that determines the present value of future rewards: a reward received $k$ time steps in the future is worth only $\gamma^{k-1}$ times what it would be worth if it were received immediately.
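One common way to use the Bellman equation is to apply it repeatedly as an update rule until the values stop changing, a procedure known as iterative policy evaluation. The sketch below assumes a tiny hypothetical problem with states `A` and `B` and a terminal state `end`; the names and numbers are illustrative only.

```python
# dynamics[s][a] lists (probability, next_state, reward) triples, i.e. p(s', r | s, a).
dynamics = {
    "A": {"go": [(0.5, "B", 1.0), (0.5, "end", 0.0)]},
    "B": {"go": [(1.0, "end", 2.0)]},
}
# policy[s][a] is pi(a | s); here each state has a single action.
policy = {"A": {"go": 1.0}, "B": {"go": 1.0}}


def evaluate_policy(policy, dynamics, gamma=0.9, tol=1e-10):
    """Sweep the Bellman equation over all states until the values converge."""
    values = {s: 0.0 for s in dynamics}
    while True:
        delta = 0.0
        for s in dynamics:
            new_v = sum(
                pi_a * prob * (r + gamma * values.get(s_next, 0.0))
                for a, pi_a in policy[s].items()
                for prob, s_next, r in dynamics[s][a]
            )
            delta = max(delta, abs(new_v - values[s]))
            values[s] = new_v
        if delta < tol:
            return values


values = evaluate_policy(policy, dynamics)
```

Terminal states such as `end` are not listed in `dynamics`, so `values.get` treats their value as 0. For this example the values converge to about 2.0 for `B` and 1.4 for `A`, since 0.5 × (1 + 0.9 × 2) = 1.4.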

The Bellman equation relates the value functions of two consecutive states. If the value function under policy π is greater than or equal to the value function under policy π' in every state, we consider π to be as good as or better than π'. This comparison is widely used in policy improvement to move toward an optimal policy.
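A single policy-improvement step can be sketched as follows: given the values of the current policy, pick in every state the action with the highest expected one-step value. The problem below (one state, a `safe` and a `risky` action) and all function names are assumptions made for illustration.

```python
def action_value(dynamics, values, state, action, gamma=1.0):
    """Expected reward plus discounted value of the successor state."""
    return sum(
        prob * (reward + gamma * values.get(next_state, 0.0))
        for prob, next_state, reward in dynamics[state][action]
    )


def improve_policy(dynamics, values, gamma=1.0):
    """Return a deterministic policy that is greedy with respect to `values`."""
    return {
        state: max(actions, key=lambda a: action_value(dynamics, values, state, a, gamma))
        for state, actions in dynamics.items()
    }


def at_least_as_good(values_a, values_b):
    """True if values_a is greater than or equal to values_b in every state."""
    return all(values_a[s] >= values_b[s] for s in values_b)


# Hypothetical problem: "safe" pays 1 for sure, "risky" pays 4 or 0 with equal chance.
dynamics = {
    "s0": {
        "safe": [(1.0, "end", 1.0)],
        "risky": [(0.5, "end", 4.0), (0.5, "end", 0.0)],
    }
}
values_safe = {"s0": 1.0}  # value of always choosing "safe"
better_policy = improve_policy(dynamics, values_safe)
values_better = {
    s: action_value(dynamics, values_safe, s, a) for s, a in better_policy.items()
}
```

Here the greedy step switches to `risky`, whose expected value of 2.0 exceeds the 1.0 of `safe`, so the new policy is at least as good as the old one in every state.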

A model of the environment (optional, only for model-based RL): A model mimics the behavior of the environment. It can usually be expressed as a finite-state model when the environment is deterministic, or as a Markov Decision Process (MDP) when it is probabilistic.

We will use a simple case to illustrate the general concept of reinforcement learning.

Case Study

The Markov Decision Process (MDP) is a formal framework for evaluative feedback: the agent receives different rewards for choosing different actions in different situations. It is an idealized form of the reinforcement learning problem. Here we use a simple example to illustrate the policy, reward, and value function, and to show how an agent interacts with its environment in a Markov decision process.

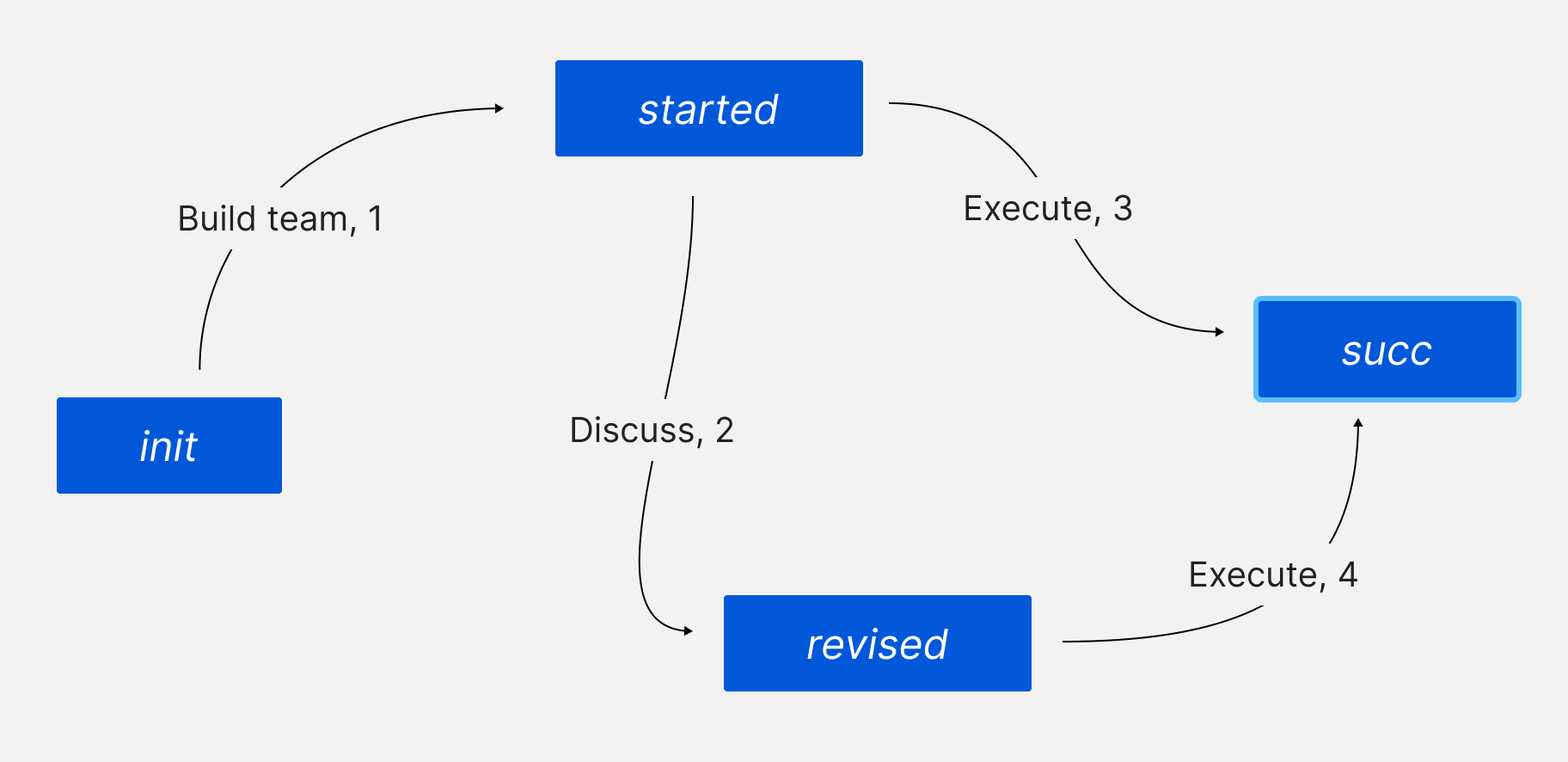

More specifically, we assume a set of four possible states, S = {init, started, revised, succ}, representing the situations the agent may be in as it explores the system; three actions, {Build team, Execute, Discuss}, that it may execute; and a corresponding reward R after it executes an action in a given state. To simplify the example, we ignore the failure state. At each time step t (t = 0, 1, 2, 3, …), the agent receives a representation of the environment’s state, $s_t$, and executes an action, $a_t$. One time step later, the agent receives a reward, $r_{t+1}$, and the new environment state, $s_{t+1}$, as a consequence of its action.

At state s1 (started), the agent can follow one of two policies: (1) π(started) → Execute or (2) π(started) → Discuss.

The reward is the number an agent receives for executing the action its policy selects. In our example, the reward for policy (1) is 3 and the reward for policy (2) is 1.

The value from state started to state succ via policy (1) is 3. The value from state started to state succ via policy (2) is 5.
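These numbers can be reproduced with a small deterministic model. The figure's transitions are not spelled out in the text, so the table below is an assumption: Execute leads from started to succ with reward 3, Discuss leads from started to revised with reward 1, and Execute from revised is given reward 4 so that policy (2) totals 5. `path_value` is a hypothetical helper.

```python
# Assumed transition table: (state, action) -> (next_state, reward).
transitions = {
    ("started", "Execute"): ("succ", 3),
    ("started", "Discuss"): ("revised", 1),
    ("revised", "Execute"): ("succ", 4),  # assumed reward, inferred as 5 - 1
}


def path_value(policy, start, goal="succ"):
    """Follow a deterministic policy from `start` to `goal` and sum the rewards."""
    state, total = start, 0
    while state != goal:
        state, reward = transitions[(state, policy[state])]
        total += reward
    return total


policy_1 = {"started": "Execute"}
policy_2 = {"started": "Discuss", "revised": "Execute"}

value_1 = path_value(policy_1, "started")  # 3
value_2 = path_value(policy_2, "started")  # 1 + 4 = 5
```

Policy (2) earns the smaller immediate reward but the larger value, which is why an agent should compare policies by value rather than by the reward of the first step.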

In the real world, it is complicated to create an environment model. Even when you can build one, the model is usually non-deterministic. For instance, executing our plan may not succeed: if we do not revise the plan, we may have a 70% chance of failing the project, while after revising it we may have a 30% chance of failing. In this case, the value function must take the probability of each outcome into account.
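To see how the probabilities enter the calculation, the sketch below weights each outcome by its chance of occurring. It rests on several assumptions not stated in the text: a `fail` state with reward 0, a reward of 4 for a successful Execute after revising, and the same transition structure as in the deterministic example.

```python
# Assumed stochastic model: (state, action) -> list of (probability, next_state, reward).
outcomes = {
    ("started", "Execute"): [(0.3, "succ", 3), (0.7, "fail", 0)],  # 70% failure without revising
    ("started", "Discuss"): [(1.0, "revised", 1)],
    ("revised", "Execute"): [(0.7, "succ", 4), (0.3, "fail", 0)],  # 30% failure after revising
}
TERMINAL = {"succ", "fail"}


def expected_value(policy, state):
    """Expected sum of rewards from `state` when following a deterministic policy."""
    if state in TERMINAL:
        return 0.0
    return sum(
        prob * (reward + expected_value(policy, next_state))
        for prob, next_state, reward in outcomes[(state, policy[state])]
    )


policy_1 = {"started": "Execute"}
policy_2 = {"started": "Discuss", "revised": "Execute"}

value_1 = expected_value(policy_1, "started")  # 0.3 * 3, about 0.9
value_2 = expected_value(policy_2, "started")  # 1 + 0.7 * 4, about 3.8
```

Under these assumptions, revising the plan first is worth even more relative to executing immediately than in the deterministic case, because it also lowers the chance of failure.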

Finding a policy that achieves the maximum reward over the long run is the main task of a reinforcement learning algorithm. The rewards agents receive at each step usually vary from situation to situation, and agents rely mainly on trials and feedback rewards to learn. Every action changes the rewards received and the value functions that follow from them. The system keeps updating its value functions over states and actions based on these trials and feedback rewards.
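One widely used algorithm for this kind of trial-and-feedback updating is tabular Q-learning, which this article does not otherwise cover. The sketch below applies it to the case study under the same assumed transition table as before (including the inferred reward of 4 for Execute from revised). The hyperparameters `alpha`, `gamma`, and `epsilon` are arbitrary illustrative choices.

```python
import random

# Assumed deterministic model of the case study: (state, action) -> (next_state, reward).
TRANSITIONS = {
    ("started", "Execute"): ("succ", 3),
    ("started", "Discuss"): ("revised", 1),
    ("revised", "Execute"): ("succ", 4),  # assumed reward
}
ACTIONS = {"started": ["Execute", "Discuss"], "revised": ["Execute"]}


def q_learning(episodes=2000, alpha=0.5, gamma=1.0, epsilon=0.3, seed=0):
    """Learn action values Q(s, a) purely from repeated trials and reward feedback."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s, acts in ACTIONS.items() for a in acts}
    for _ in range(episodes):
        state = "started"
        while state != "succ":
            # Explore with probability epsilon, otherwise exploit the current estimates.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS[state])
            else:
                action = max(ACTIONS[state], key=lambda a: q[(state, a)])
            next_state, reward = TRANSITIONS[(state, action)]
            # Value of the best action available in the next state (0 if terminal).
            best_next = max(
                (q[(next_state, a)] for a in ACTIONS.get(next_state, [])),
                default=0.0,
            )
            # Move the estimate toward the observed reward plus the best future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
best_first_action = max(ACTIONS["started"], key=lambda a: q[("started", a)])
```

After enough episodes the learned values approach 3 for Execute and 5 for Discuss in state started, so the agent comes to prefer discussing first without ever being given the model explicitly.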

Conclusion

Reinforcement learning simulates the human decision-making process. It helps an agent keep optimizing toward its goals by using trial and error to gain experience and improve its performance. We can use RL algorithms to design agents. Innovation management involves multiple departments, areas, and directions, so multiple agents can be used in decision-making systems to discover and improve decisions. Those agents need to negotiate with each other to optimize overall value, which is a matter of agent society design.

References

[2] Sutton, R. S., & Barto, A. G. (2018). Reinforcement Learning: An Introduction. The MIT Press.

[3] Wooldridge, M. (2002). An Introduction to MultiAgent Systems. John Wiley & Sons.